Should a self-driving car kill its passengers for the greater good, for instance by swerving into a wall to avoid hitting a large number of pedestrians? Surveys of nearly 2,000 US residents revealed that, while we strongly agree that autonomous vehicles should strive to save as many lives as possible, we are not willing to buy such a car for ourselves, preferring instead one that tries to preserve the lives of its passengers at all costs.

Why buy a self-driving car?

Driving our own cars might be an enjoyable pursuit, but it's also responsible for a tremendous amount of misery: it locks out the elderly and physically challenged and is the primary cause of death, worldwide, for people aged 15 to 29.

Every year, over 30,000 traffic-related deaths and millions of injuries, costing close to a trillion dollars, take place in the US alone (worldwide, the numbers approach 1.25 million fatalities and 20 to 50 million injuries a year). And, according to numerous studies, human error has been responsible for a staggering 90 percent or more of these accidents.

Autonomous vehicles (AVs) are still years away from being ready for prime time. Once the technology is mature, however, self-driving cars and trucks could prevent a great number of accidents (indeed, up to the nine out of 10 that are caused by the human factor), with additional benefits like reducing pollution and traffic jams.

"In the future, our goal is to have technologies that can help to completely avoid crashes," Volvo told us in an e-mail. "Autonomous vehicles will be part of this strategy since these vehicles can avoid the crashes caused by human error."

The driverless dilemma

While AVs are expected to vastly increase safety, there will be rare instances where harming or killing passengers, pedestrians or other drivers will be inevitable.

Autonomous vehicles, Volvo told us, should be very cautious and polite, always stay within the speed limit, thoroughly evaluate any risks and take all precautionary measures ahead of time, thus avoiding any dangerous situations and, with them, all ethical conundrums.

But while Prof. Iyad Rahwan, one of the three authors of research published today in the journal Science, agrees that safety should be the manufacturers' priority, he also told us that "unavoidable harm" scenarios will still present themselves regardless of how sophisticated or cautious self-driving technology becomes.

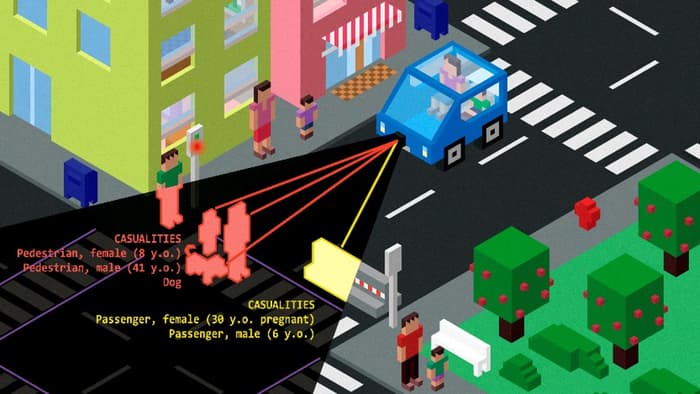

"One does not have to go very far to imagine such situations," Rahwan told us. "Suppose a truck ahead is fully loaded with heavy cargo. Suddenly the chain that is holding the doors closed breaks, and the cargo falls on the road. An autonomous vehicle driving behind can only soften the impact by applying the brakes, but may not be able to stop in time to avoid the cargo completely, so there is some probability of harm to passengers. Alternatively, the car can swerve, but that might endanger other cars or pedestrians on the sidewalk."

On such rare occasions, the programming of a self-driving vehicle will have a split second to make a rational but incredibly tough decision that most drivers don't get to make: the decision on exactly who should be harmed and who should be spared. Should the car seek to minimize injury at all costs, swerving out of the way of two pedestrians even if it means crashing the car against a barrier and killing its single passenger? Or should the car's programming try to preserve the lives of its passengers no matter what?

Professors Jean-François Bonnefon, Azim Shariff, and Iyad Rahwan conducted six online surveys (totaling close to 2,000 participants) trying to understand how the public at large feels about an artificial intelligence with the authority to make such delicate life-or-death decisions. The researchers see this as an ethical issue that must be adequately addressed, in great part because it could be a psychological barrier that slows down the adoption of life-saving autonomous vehicles.

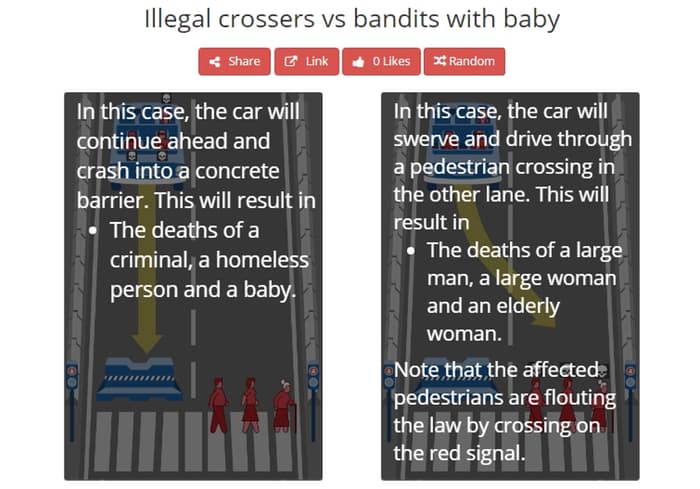

The survey participants were presented with scenarios such as this:

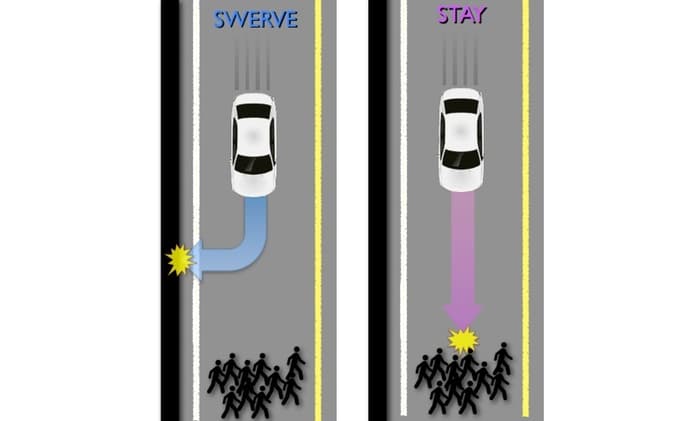

You are the sole passenger in an autonomous self-driving vehicle traveling at the speed limit down a main road. Suddenly, 10 pedestrians appear ahead, in the direct path of the car. The car could be programmed to: swerve off to the side of the road, where it will impact a barrier, killing you but leaving the 10 pedestrians unharmed; or stay on its current path, where it will kill the 10 pedestrians, but you will be unharmed.

As might be expected, the vast majority (76 percent) of the study participants agreed that the vehicle should sacrifice its single passenger to save the 10 pedestrians.

In later studies, participants agreed that an AV should not sacrifice its single passenger when only one pedestrian could be saved (only 23 percent approved), but as expected, the approval rate increased as the number of hypothetical lives saved increased. This pattern continued even when participants were asked to place themselves and a family member in the car.

The thorny issue, however, came up when participants were asked about what type of self-driving car they would choose for their personal use. Respondents indicated they were 50 percent likely to buy a self-driving car that preserved its passengers at all costs, and only 19 percent likely to buy a more "utilitarian" car that would seek to save as many lives as possible. In other words, even though the participants agreed that AVs should save as many lives as possible, they still desired the self-preserving model for themselves.

Lastly, the study also revealed that participants were firmly against the government regulating AVs to adopt a utilitarian algorithm, indicating they were much more likely to buy an unregulated vehicle (59 percent likelihood) versus a regulated vehicle (21 percent likelihood).

As the researchers explained, this problem is a glaring example of the so-called tragedy of the commons, a situation in which a shared resource is depleted by individual users acting out of self-interest. In this case, even though society as a whole would be better off using utilitarian algorithms alone, an individual can still improve his chances of survival by choosing a self-preserving car at the cost of overall public safety.

Is AI ready to make life-or-death decisions?

Scenarios where an artificial-intelligence-driven vehicle will have to decide between life and death are going to be rare, but the chances of encountering them will increase once millions of self-driving cars hit the road. Also, regardless of whether a particular scenario is going to happen or not, software engineers still need to program those choices into the car's software ahead of time.

While the hypothetical scenarios presented in the studies were simplified, a real-life algorithm will in all likelihood need to face several more layers of complexity that make the decision even tougher from an ethical perspective.

One complicating factor is that the outcome of swerving off the road (or staying the course) may not be certain. Should an algorithm decide to run off the road to avoid a pedestrian if it detects that this will kill the passenger only 50 percent of the time, whereas keeping straight would have killed the pedestrian 60 or 70 percent of the time? And how accurately can the car estimate those probabilities?
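The arithmetic behind that question can be made concrete. The short Python sketch below is purely illustrative and is not the method used in the study or by any manufacturer. It assumes a strictly utilitarian rule that compares expected fatalities, and the names `expected_deaths`, `utilitarian_choice` and the `margin` parameter (a stand-in for how far off the car's probability estimates might be) are hypothetical.

```python
def expected_deaths(p_death: float, people: int = 1) -> float:
    """Expected fatalities when each of `people` dies with probability `p_death`."""
    if not 0.0 <= p_death <= 1.0:
        raise ValueError("p_death must be between 0 and 1")
    return p_death * people


def utilitarian_choice(
    p_passenger_swerve: float,
    p_pedestrian_straight: float,
    passengers: int = 1,
    pedestrians: int = 1,
    margin: float = 0.0,
) -> str:
    """Pick the maneuver with fewer expected deaths (hypothetical rule).

    `margin` is an assumed uncertainty in the estimates: if the two
    expected values differ by no more than `margin`, the rule cannot
    tell the options apart and returns "undetermined".
    """
    swerve = expected_deaths(p_passenger_swerve, passengers)
    straight = expected_deaths(p_pedestrian_straight, pedestrians)
    if abs(swerve - straight) <= margin:
        return "undetermined"
    return "swerve" if swerve < straight else "stay"


# Figures from the paragraph above: 50 percent risk to the passenger
# when swerving, 60 or 70 percent risk to the pedestrian when staying.
print(utilitarian_choice(0.5, 0.6))
print(utilitarian_choice(0.5, 0.7))
# Same 50 vs. 60 percent case, but with estimates trusted only to
# within 15 percentage points.
print(utilitarian_choice(0.5, 0.6, margin=0.15))
```

With the figures quoted above, this toy rule favors swerving in both cases. Once the estimates are only trusted to within 15 percentage points, the 50 versus 60 percent case becomes "undetermined", which is why the accuracy of those probabilities matters as much as the rule itself.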

Also, when the choice is between hitting one of two motorcyclists (one wearing a helmet, the other not), should the car opt to hit the law-abiding rider, since he would have a slightly better chance of survival? Or should a reward for following the law factor into the decision?

Or, should the lives of a pregnant woman, a doctor, an organ donor or a CEO be considered more worthy than other lives? Should the age and life expectancy of the potential victims be a factor? And so on.

"I believe that on our way towards full autonomous vehicles we need to accept the fact that these autonomous agents will eventually end up making critical decisions involving people's lives," Dr. German Ros, who led the development of an AV virtual training environment (and did not participate in this study) told Gizmag.

"We would have to ask ourselves if autonomous vehicles should be serving 'us' as individuals or as a society. If we decide that AVs are here to improve our collective lives, then it would be mandatory to agree on a basic set of rules governing AV morals. However, the findings of this study suggest that we are not ready to define these rules yet ... (showing) the necessity of bringing this question to a long public debate first."

In an effort to fuel the debate and gain a human perspective on these incredibly tough ethical questions, the researchers have created an interactive website that lets users create custom scenarios (like the one above) and pick what they believe to be the moral choice for scenarios devised by other users.

The big picture

Such scenarios where harm is unavoidable can present extremely tough ethical questions, but they are going to be rare. We don't know exactly how rare, since cars don't have black boxes that might tell us, after the fact, whether an accident could have been avoided by sacrificing the driver. It is, however, safe to assume that the number of lives that could be saved by an advanced self-driving fleet will easily trump the number of people currently killed in auto accidents.

The troubling aspect is that, no matter how uncommon those scenarios might be, they are likely to gain a disproportionate amount of public exposure in mainstream media, much more than the far larger (but, sadly, less "newsworthy") number of lives that AVs would save.

"Will media focus on the dangers rather than benefits of AVs be a problem? Almost certainly yes," Shariff told Gizmag. "This plays off common psychological biases such as the availability heuristic. One of the reasons people are so disproportionately afraid of airplane crashes and terrorism (and especially acts of terrorism involving airplane crashes) is because every time one of them happens, the media spends an enormous amount of time reporting on it, in excruciating detail. Meanwhile, common (single victim) gun deaths and prescription drug overdoses, which are objectively more dangerous, are relatively ignored and thus occupy less mindshare.

"Now, you can probably recall the minor media frenzy over the Google car that 'crashed' into a bus (at 2 mph). A crash involving human drivers is the 'dog bites man' story, whereas the much rarer crash involving an AV will be a 'man bites dog' story which will capture an already nervous public's attention. "

How do we build an autonomous fleet?

One of the first steps toward getting more life-saving autonomous vehicles on the road will have to be to show with hard data that they are indeed safer than manually driven vehicles. Even though humans cause 90 percent of accidents, this doesn't mean that AV systems (especially in their early versions) will prevent them all.

Another important question is on the regulatory side. What type of legislation would lead to a more rapid adoption of autonomous vehicles? Governing agencies might be naturally tempted to push for a utilitarian, "save as many lives as possible" approach. But, as already highlighted in this study, people are firmly against this sort of regulatory enforcement, and insisting on a utilitarian approach could slow the adoption rate of self-driving cars.

For these reasons, the authors of the study have suggested that regulators might want to forgo utilitarian choices for the sake of putting the safer AVs on the road sooner rather than later.

One encouraging aspect is that regulators seem willing to listen to the public's input on this matter.

"The autonomous vehicle offers a lot of promise in the role of vehicle safety and overall safety of the motoring public," California Department of Motor Vehicle representative Jessica Gonzalez told Gizmag. "As California is leading the pack, we are working close with other states and the Federal government. We have received public comment on the draft deployment regulations we are contemplating the public comment as we are writing the operational guidelines. We held two workshops on the draft regulations and received 34 public comments."

The California DMV, however, would not comment on whether the scenarios of "unavoidable harm" described in this article had so far been part of the discussion in forming the draft regulations, or whether there are plans to discuss these scenarios in the future.

On the manufacturers' side, Volvo told us the best way to speed up the adoption of autonomous vehicles would be to emphasize advantages like greater mobility for more people, as well as the fuel and time savings that will come from a smoother ride.

Google is also clearly thinking about the question deeply, which is one of the reasons why the Google car is probably the least menacing-looking vehicle you'll ever see.

"But I think the biggest factor will be the 'foot in the door' variable," Shariff told Gizmag. "Autonomous capabilities are going to emerge gradually in cars. We are already seeing that, and we have already seen that. People are thus going to be eased into being driven by these cars, as they bit-by-bit take over more functions. We have become accustomed to terrifying things (like elevators and planes) by just being gradually exposed to them. Of course, elevators and planes never had to be programmed to make trade-off decisions about people's lives."

The study appears today in the current issue of the journal Science.

Google and Tesla did not respond to our request for comment.

Source: MIT