Can AI detect homosexuality from a facial image? And should it?

A controversial study claims a person's sexual orientation can be identified with a high degree of accuracy from a single facial image (Credit: artoleshko/Depositphotos)

A study published late last week by two Stanford researchers has sent shockwaves around the world. The duo reportedly developed a neural network that could detect the sexual orientation of a person just by studying a single facial image. The startling degree of accuracy achieved by the algorithm has been questioned by some and condemned as dangerous by others.

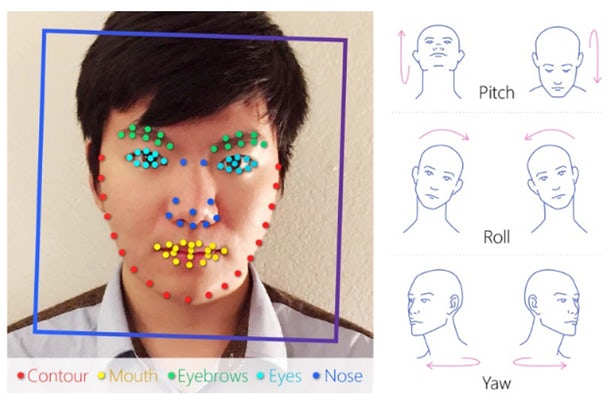

In the controversial study, the researchers trained a neural network on over 35,000 facial images, evenly split between gay and heterosexual subjects. The algorithm tracked not only transient facial features (e.g., grooming or expressions) but also traits that are fixed and perhaps genetically or hormonally determined.

One of the key hinges of the research was a reliance on what is called the prenatal hormone theory (PHT) of sexual orientation. The idea is that either over- or under-exposure in the womb to the key androgens responsible for sexual differentiation is a major driver for same-sex orientation in later life. The research also notes that these specific androgens affect key features of the face, meaning that certain facial characteristics can be linked to sexual orientation.

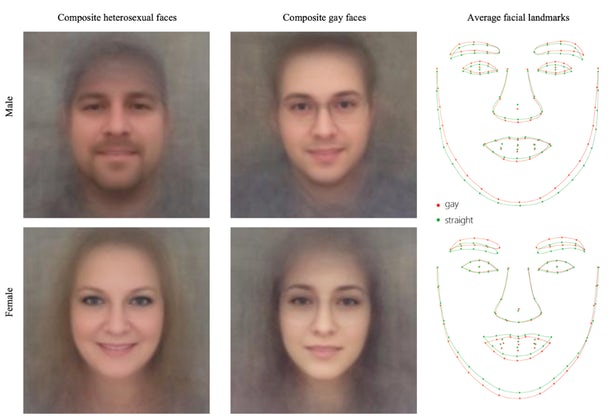

"Typically, [heterosexual] men have larger jaws, shorter noses, and smaller foreheads. Gay men, however, tended to have narrower jaws, longer noses, larger foreheads, and less facial hair," writes Michal Kosinski, one of the authors of the study, in notes recently published defending his work. "Conversely, lesbians tended to have more masculine faces (larger jaws and smaller foreheads) than heterosexual women."

When presented with randomly selected pairs of images, each featuring one homosexual man and one heterosexual man, the machine correctly picked the sexual orientation of each subject 81 percent of the time. This rose to 91 percent accuracy when it was presented with five images of the same person. For women, the prediction rate was lower, with a base of 71 percent accuracy from one image, rising to 83 percent with five images.
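To make the pair-based figures concrete, here is a minimal sketch of how a pairwise accuracy of this kind could be computed. It is an illustration only, not the researchers' code: the function name `pairwise_accuracy`, the neutral group labels and the scores are all invented for this example, and counting a tie as half a correct pick is an assumption.

```python
from itertools import product


def pairwise_accuracy(scores_a, scores_b):
    """Fraction of (a, b) pairs in which the group A item scores higher.

    Ties are counted as half a correct pick (an assumption of this sketch).
    """
    pairs = list(product(scores_a, scores_b))
    correct = sum(
        1.0 if a > b else 0.5 if a == b else 0.0
        for a, b in pairs
    )
    return correct / len(pairs)


# Hypothetical classifier scores, made up purely for illustration.
group_a_scores = [0.9, 0.7, 0.6, 0.4]
group_b_scores = [0.8, 0.5, 0.3, 0.2]

result = pairwise_accuracy(group_a_scores, group_b_scores)
print(f"pairwise accuracy: {result:.2f}")
```

On these made-up scores the higher score goes to the group A member in 12 of the 16 possible pairs, giving 0.75. A figure of this kind describes how often a classifier ranks one member of a pair above the other.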

The authors of the study seemed to understand the problematic nature of releasing research such as this. In their extensive additional notes they claim the study is intended to make people aware of the potential powers that current visual algorithmic tools have achieved.

"We were really disturbed by these results and spent much time considering whether they should be made public at all," writes Kosinski. "We did not want to enable the very risks that we are warning against."

LGBTQ advocacy organizations GLAAD and the Human Rights Campaign (HRC) immediately went on the offensive following the study's public release. Questions were raised over the study's methodology, including the limited scope of the data: all subjects were white, images were plucked from US dating sites, and no distinctions were made between sexual orientation, sexual activity or bisexual identity.

"Technology cannot identify someone's sexual orientation," says GLAAD's Chief Digital Officer, Jim Halloran. "What their technology can recognize is a pattern that found a small subset of out white gay and lesbian people on dating sites who look similar. Those two findings should not be conflated."

The prospect of this kind of research being applied for nefarious ends has concerned many. Earlier this year, horrific stories started to come out of the conservative Russian republic of Chechnya. Gay men were apparently being rounded up by authorities, sent to prison camps, beaten and sometimes killed.

Regardless of the accuracy of the actual science at hand, questions of moral responsibility were being asked of the researchers. Why was this even being studied in the first place? Simply to prove a point?

"Imagine for a moment the potential consequences if this flawed research were used to support a brutal regime's efforts to identify and/or persecute people they believed to be gay," says HRC Director of Public Education and Research, Ashland Johnson. "Stanford should distance itself from such junk science rather than lending its name and credibility to research that is dangerously flawed and leaves the world – and this case, millions of people's lives – worse and less safe than before."

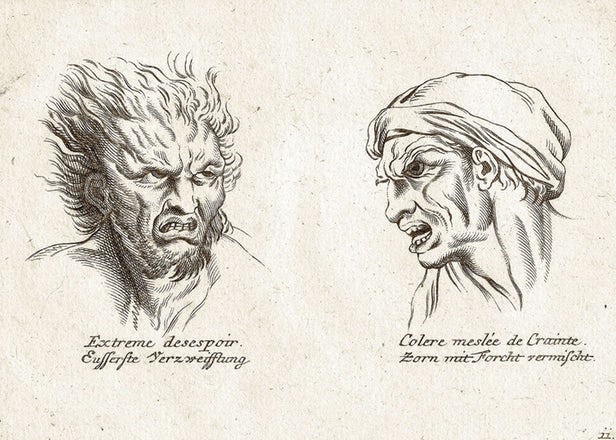

There has undeniably been a resurgence of interest in the previously derided field of physiognomy. The idea, which has circulated for hundreds of years, is that personality traits and characteristics can be identified through the study of physical features. The theory, alongside phrenology, was often used to justify horrifically racist or prejudiced behavior under the veil of objective science.

More recently, the field has undergone a small revival underpinned by new genetic understandings and computational advances. In publishing the paper, Kosinski defends some of the classic physiognomist claims by arguing that, while people have historically not been successful at judging others based on facial features alone, this doesn't mean that those physical traits were not actually present. The implication is that we simply didn't have the technology to accurately process such minute facial distinctions.

"The fact that physiognomists were wrong about many things does not automatically invalidate all of their claims," writes Kosinski. "The same studies that prove that people cannot accurately do what physiognomists claimed was possible consistently show that they were, nevertheless, better than chance. Thus, physiognomists' main claim – that the character is to some extent displayed on one's face – seems to be correct (while being rather upsetting)."

The science is certainly not as clear cut as Kosinski and his research partner are implying. PHT is generally considered just one of many possible factors that could determine a person's ultimate sexual orientation, and hinging an entire facial recognition system on it is questionable to say the least.

The other, less fixed measures tracked by the algorithm seem rather arbitrary and heavily shaped by culture. One note in the study claims that lesbians smiled less than their heterosexual counterparts, had darker hair and used less eye makeup.

But regardless of the debatable science, this study raises a significant question about the moral responsibility of the scientist. Is it enough to simply say, as the researchers literally have, "we studied an existing technology – one widely used by companies and governments – to understand the privacy risks it poses"?

Is that sufficient justification for research that could have incredibly damaging practical results? After all, the study takes for granted that sexual orientation can be clearly tracked through facial characteristics. That assumption is treated as not even up for debate in the study. That in itself is a dangerous and potentially irresponsible outcome.